STAC Geoparquet

How to access terrabyte data via STAC Geoparquet and DuckDB

Besides the STAC API (https://stac.terrabyte.lrz.de/public/api) we have made an export of the STAC Items for each collection as Geoparquet files available. You can query those Geoparquet files with the in-memory database DuckDB. Without interacting with the terrabyte STAC API you can query and filter STAC items with your own computing resources.

Please note: The Geoparquet files are exported on a monthly basis from the STAC API database. Thus, you will not find recent data available. For those you need to use still the STAC API.

Available collections and their Geoparquet files

- Sentinel-1 GRD:

/dss/dsstbyfs03/pn56su/pn56su-dss-0022/Sentinel-1/GRD/geoparquet/*.parquet

- Sentinel-1 SLC:

/dss/dsstbyfs03/pn56su/pn56su-dss-0022/Sentinel-1/SLC/geoparquet/*.parquet

- Sentinel-2 L1C Collection 1:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Sentinel-2-Col-1/L1C/geoparquet/*.parquet

- Sentinel-2 L2A Collection 1:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Sentinel-2-Col-1/L2A/geoparquet/*.parquet

- Sentinel-3 OLCI L1 EFR:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Sentinel-3/OLCI/OL_1_EFR___/geoparquet/*.parquet

- Landsat TM Collection 2 L2A:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Landsat/collection-2/level-2/standard/tm/geoparquet/*.parquet

- Landsat ETM Collection 2 L2A:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Landsat/collection-2/level-2/standard/etm/geoparquet/*.parquet

- Landsat OT Collection 2 L2A:

/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Landsat/collection-2/level-2/standard/oli-tirs/geoparquet/*.parquet

Installation of DuckDB

DuckDB provides different options for installation:

- Command line via binary download:

wget https://github.com/duckdb/duckdb/releases/download/v1.0.0/duckdb_cli-linux-amd64.zip

- Command line via Micromamba:

micromamba install duckdb -c conda-forge

- Python via Micromamba:

micromamba install python-duckdb -c conda-forge

- Python via Pip:

pip install duckdb

Further documentation: https://duckdb.org/docs/installation/

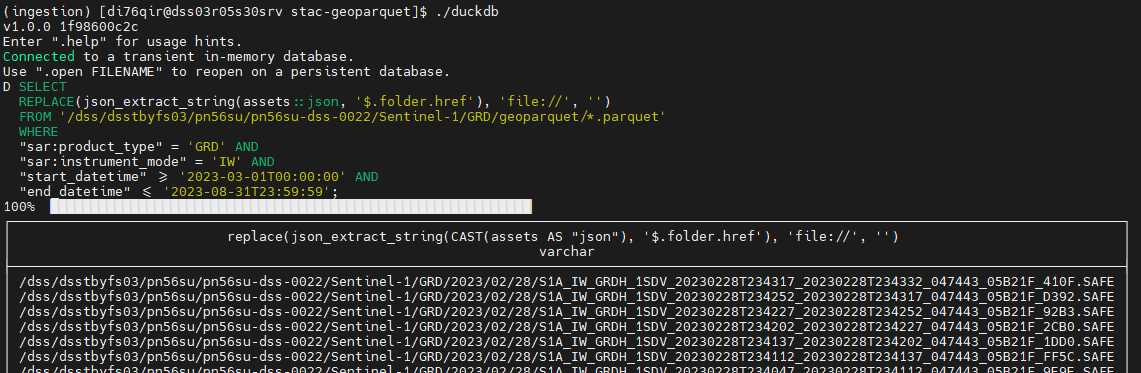

Using DuckDB on the command line

- Run

duckdbcommand

./duckdb

- Run query within terminal

SELECT

REPLACE(json_extract_string(assets::json, '$.folder.href'), 'file://', '')

FROM '/dss/dsstbyfs03/pn56su/pn56su-dss-0022/Sentinel-1/GRD/geoparquet/*.parquet'

WHERE

"sar:product_type" = 'GRD' AND

"sar:instrument_mode" = 'IW' AND

"start_datetime" >= '2023-03-01T00:00:00' AND

"end_datetime" <= '2023-08-31T23:59:59'

Using DuckDB within Python

An example as Jupyter Notebook is available here:

- https://github.com/DLR-terrabyte/eo-examples/blob/main/quickstarts/Search-STAC-Geoparquet.ipynb

- https://nbviewer.org/github/DLR-terrabyte/eo-examples/blob/main/quickstarts/Search-STAC-Geoparquet.ipynb

Similarly to the duckdb command line interface, you can import the duckdb library in Python and conduct queries.

import duckdb

# Query Geoparquet files

result = duckdb.query("SELECT count(id) FROM '/dss/dsstbyfs03/pn56su/pn56su-dss-0022/Sentinel-1/GRD/geoparquet/*.parquet';")

# Convert result into Pandas dataframe

df = result.df()

DuckDB Spatial Extension

If you want to conduct spatial operations within your SQL query (e.g., intersects), you need to install and load the spatial extension (in both, command line and Python)

import duckdb

duckdb.install_extension("spatial")

duckdb.load_extension("spatial")

Supported spatial operations are listed in the official documentation: https://duckdb.org/docs/extensions/spatial.html

Converting DuckDB results into STAC items

Results from the DuckDB query can be converted back into STAC items to be used further with any STAC item compliant software (e.g., odc-stac). Therefore, we make use of the stac_table_to_items function of the stac-geoparquet library.

import json

import pystac

import duckdb

from stac_geoparquet.arrow._api import stac_table_to_items

geoparquet = '/dss/dsstbyfs01/pn56su/pn56su-dss-0008/Sentinel-2-Col-1/L2A/geoparquet/*.parquet'

sql_where = '((("eo:cloud_cover" BETWEEN 0 AND 21) AND ("datetime" BETWEEN \'2023-02-01T00:00:00Z\' AND \'2023-02-28T23:59:59Z\')))'

sql_query = f"SELECT * FROM read_parquet('{geoparquet}', union_by_name=False) WHERE {sql_where}"

db = duckdb.query(sql_query)

## Convert DuckDB result to Arrow table

table = db.fetch_arrow_table()

## Convert Arrow table to List of PyStac-Items

items = []

for item in stac_table_to_items(table):

item['assets'] = json.loads(item['assets'])

items.append(pystac.Item.from_dict(item))

The full example is here: https://nbviewer.org/github/DLR-terrabyte/eo-examples/blob/main/quickstarts/Search-STAC-Geoparquet.ipynb

Using pygeofilter to convert your STAC API Query (CQL) to DuckDB

To easily switch between STAC API and Geoparquet files, you can convert the CQL2 format into an SQL format supported by DuckDB. Therefore, we make use of pygeofilter (https://github.com/geopython/pygeofilter) as well as the extension pygeofilter-duckdb (https://github.com/DLR-terrabyte/pygeofilter-duckdb).

# Use pygeofilter library to convert between CQL2-JSON/Text and SQL query

from pygeofilter.parsers.cql2_json import parse as json_parse

from pygeofilter.backends.duckdb import to_sql_where

from pygeofilter.util import IdempotentDict

# Define CQL2-JSON query

start = '2023-02-01T00:00:00Z'

end = '2023-02-28T23:59:59Z'

cql2_filter = {

"op": "and",

"args": [

{

"op": "between",

"args": [

{

"property": "eo:cloud_cover"

},

[0, 21]

]

},

{

"op": "between",

"args": [

{

"property": "datetime"

},

[start, end]

]

},

{

"op": "s_intersects",

"args": [

{ "property": "geometry" } ,

{

"type": "Polygon", # Baden-Württemberg

"coordinates": [[

[7.5113934084, 47.5338000528],

[10.4918239143, 47.5338000528],

[10.4918239143, 49.7913749328],

[7.5113934084, 49.7913749328],

[7.5113934084, 47.5338000528]

]]

}

]

}

]

}

# Convert CQL2-JSON query to DuckDB SQL where clause

sql_where = to_sql_where(json_parse(cql2_filter), IdempotentDict())

The full example is here: https://nbviewer.org/github/DLR-terrabyte/eo-examples/blob/main/quickstarts/Search-STAC-Geoparquet.ipynb