SLURM workload manager

The batch system of the HPDA terrabyte clusters is the open-source workload manager SLURM (Simple Linux Utility for Resource Management). In order to process on the HPDA-terrabyte clusters, you must submit one or more jobs to SLURM. SLURM will find and allocate the resources required for your job (e.g. the compute nodes, cores and RAM to run your job on).

SLURM commands

The following table provides an overview of some useful SLURM commands used to submit and manage jobs. On the system, a man page is also supplied for each command. Since SLURM is a very powerful and comprehensive tool, we just provide some basic insights into SLURM. If you need more detailed information, please visit the official SLURM-Documentation or have a look at this wonderful SLURM-cheat sheet for the most useful commands.

| Command | Description |

|---|---|

| sbatch | Submit a job script (probably the most important SLURM command) |

| salloc | Create an interactive SLURM shell |

| srun | Execute argument command on the resources assigned to a job. Note: Must be executed inside an active job (script or interactive environment) |

| squeue | Print table of submitted jobs and their state. Note: Non-privileged users can only see their own jobs |

| sinfo | Provide overview of cluster status |

| scontrol | Query and modify SLURM jobs |

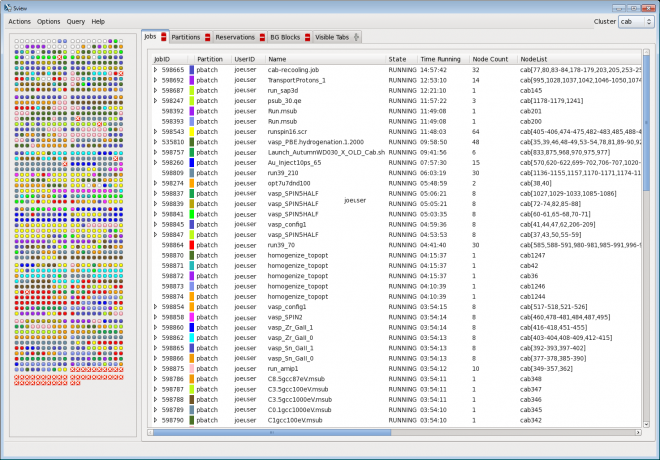

| sview | GUI for viewing and managing resources and jobs (see below) |

| sacct | List information on previous jobs. Example: sacct -X -o JobID,JobName,State,ExitCode,NodeList,AllocTRES,ReqTRES |

SLURM job setup

Specify the amount of required resources wisely! Don't block resources by demanding resources (e.g. amount of cores, RAM, processing time) you don't need. As SLURM takes care of a fair share of resources, small jobs (i.e. jobs that request a small amount of resources for short times) are preferred over large jobs (i.e. jobs that request a large amount of resources for long times) for scheduling in the queue.

Basically, there are two ways to submit processing jobs to SLURM:

- Via the command line, by passing general job control and resource requirement options to the sbatch command. This is most useful for testing purposes. See Interactive jobs (testing) for examples.

- By creating a SLURM job script that contains the general job

control and resource requirement options as comment lines of the

form

#SBATCH <option>=<value>and submitting this job script with sbatch. This is most useful for productive processing. See Script-driven SLURM jobs (productive) for examples.

General options

General options for instance controlling Shell, Execution Path, Mail, Output etc. Note: specifying environment variables in the command section of a SLURM job is not supported.

| Option | Description | Notes |

|---|---|---|

-D <directory> | Start job in specified directory. | If this is not specified, the working directory at submission time will be the starting directory of the job. If the specified directory does not exist, the job will not run. |

--mail-user=<mail_addr> | User's e-mail address. | Obligatory so we can contact you in case of problems. Batch requests which do not specify an e-mail address will not be processed. |

--mail-type=[begin/end/fail/requeue/all/none] | Batch system sends e-mail when [starting/ending/aborting/requeuing] a job. | If all is specified, each state change will produce a mail. If none is specified, no mails will be sent out. |

-J <req_name> | Name of batch request. | Default is the name of the script, or "sbatch" if the script is read from standard input. Please do not use more than 10 characters here! |

-o <filename> | write standard output to specified file. | We recommend specifying the full path name. Default value is slurm-%j.out, where %j stands for the job ID. Apart from %j, the output specification can also contain %N, which is replaced by the master node name the job is initiated on. |

-e <filename> | write standard error to specified file. | Same as above. You can also specify an absolute or relative directory in the filename. However, the directory must exist, otherwise the job will not run. |

--export=NONE | exports designated environment variables into job script | Please use with care: It is recommended to specify NONE and load all needed environment modules in the script. Conversely, if you use "ALL", it is probably a good idea to not load the module environment via /etc/profile.d/modules.sh in the script section. |

| or | ||

--export=ALL | Specifying ALL makes it nearly impossible to debug errors in your script because we cannot reproduce your environment at the time of job | |

| or | ||

--export var1=val1,var2=val2,... | If only the name of a variable is specified, the existing value is used in the SLURM script at run time. For more than one variable, a comma-separated list must be specified. Example: --export PATH,MYVAR=xxyy,LD_LIBRARY_PATH |

Job Control- and Resource Requirement-Options

| --time=xx:yy:zz | Specify limit for job run time as x hours y minutes z seconds. All three fields must be provided. | This is recommended if you want an improved chance of putting a short or medium-length parallel job through the system using the backfill mechanism of the SLURM scheduler. The maximum run time value imposed by LRZ cannot be exceeded using this option. |

| --cpus-per-task=<number> | Defines the number of hyperthreads per (MPI) task or job (in case of serial shared memory jobs). | The minimum is --cpus-per-task=2 (i.e. 2 hyperthreads ≡ 1 physical core), the maximum is --cpus-per-task=160 (i.e. 160 hyperthreads ≡ 80 physical cores ≡ 1 full node). The setting for a Single-core job is --cpus-per-task=2. |

| --gres=gpu:<number of GPUs> | Defines the number of GPUS per (MPI) task or job (in case of serial shared memory jobs). | Only available on hpda2_compute_gpu partition. |

| --mem=<memory in MByte> | Explicit memory requirement on a per-node basis | For Non-MPI jobs, please always specify --mem. Reason: If you only need a small amount of memory, the total job throughput of the cluster may be considerably improved. For Parallel distributed memory jobs (MPI), the total memory available for the job will be the number of nodes multiplied with the --mem value. |

| --clusters=[all | cluster_name] | Specify a cluster to inspect or submit to. You can also use the -M option (with the same modifiers) instead. | Please use this option in scripted batch jobs. A full list of available clusters is provided via sinfo --clusters=all or sinfo -M all |

| --partition=<partition_name> | Select the SLURM partition in which the job shall be executed. | Each SLURM cluster contains one or more partitions (with possibly different resource settings) from which a suitable one should be selected by name. |

| --account=<group-account> | Specify the group account the SLURM job shall run with. This may have impacts on the priority class of the job submission. | If you belong to a terrabyte project, you must specify your group account (which is identical to the project id), in order to benefit from a higher priority in the SLURM-queue: e.g. --account=pn56su. DLR users who got their account through the self-registration portal are in the group hpda-c by default (lowest priority class), but may specify a project id with a higher priority class here as well. |

| --nodes=<number>[-<number>] | Range for number of nodes to be used | Must only be set in case of Parallel distributed memory job (MPI), since the default setting is --nodes=1. |

| --ntasks=<number> | Number of (MPI) tasks to be started | Must only be set in case of Parallel distributed memory job (MPI), since the default setting is --ntasks=1. |

| --ntasks-per-core=<number> | Number of MPI tasks assigned to a physical core | This can be used to overcommit resources. Specifying this will normally be only useful on a system with hyperthreaded cores and sufficient memory per core. Should only be specified in Parallel distributed memory jobs (MPI), if --ntasks >1 is used. |

| --ntasks-per-node=<number> | Number of MPI tasks assigned to a node | This can be used to overcommit resources. Specifying this will normally be only useful on a system with hyperthreaded cores and sufficient memory per core. Should only be specified in Parallel distributed memory jobs (MPI), if --ntasks >1 is used. |

| --exclusive [=user|mcs] | Run job in exclusive mode | This can be used to run jobs on a node without having to share the node with others (e.g. for performance monitoring). The job allocation can not share nodes with other running jobs (or just other users with the "=user" option or groups with the "=mcs" option). If user/mcs are not specified (i.e. the job allocation can not share nodes with other running jobs), the job is allocated all CPUs and GRES on all nodes in the allocation, but is only allocated as much memory as it requested. This is by design to support gang scheduling, because suspended jobs still reside in memory. To request all the memory on a node, use --mem=0. |

| --hold | batch request is kept in User Hold (meaning it is queued but not initiated, because its priority is set to zero) | A held job can be released using scontrol to reset its priority e.g. "scontrol update jobid=<id> priority=1". |

| --overcommit | Overcommit resources. | |

| --requeue / --no-requeue | Identifies the ability of a job to be rerun or not in case of a node failure. If the switch --no-requeue is set, the job will not be rerun. | Default is --requeue |

| --dependency=<dependency_list> | Defer start of job until dependency conditions are fulfilled. The dependency list may be one (or more, via a comma separated list) of the following: after:job_id[:job_id ...] (start up once specified jobs have started execution) afterany:job_id[:job_id ...] (start up once specified jobs have terminated) afternotok:job_id[:job_id ...] (start up once specified jobs have terminated with a failure) afterok:job_id[:job_id ...] (start up once specified jobs have terminated successfully) singleton (start up once any previously submitted job with the same name and job user has terminated) | Example: the command sbatch --dependency=afterok:3712,3722./myjob.cmd will put a job into the queue that will only start if the jobs with ID 3712 and 3722 in the same SLURM cluster have successfully completed. These jobs may even belong to a different user. |

| --cpu-freq=<value> | Specify the core frequency with which a job should execute. The form the value takes is p1[-p2[:p3]] Please consult the sbatch man page for details. | Example: issuing the command sbatch --cpu-freq=1100 ./myjob.cmd will execute the job at 1.1 GHz core frequency if this is supported. Note: unsupported settings will generate an error message, but the job will execute anyway at some other setting. |

| -C (or --constraint=) | Issue a feature request on the nodes of job | Available features depend on the cluster used. Only one -C option should be supplied, but a comma-separated list of entries can be given as an argument. |

Environment Variables

The environment at submission time is exported into the job for interactive jobs, and also into scripted jobs if the --export=ALL option is specified.

The following additional, SLURM-specific variables are available inside a job:

| Slurm Variable Name | Description | Example Value |

|---|---|---|

| $SLURM_JOB_ID | Job ID | 123456 |

| $SLURM_JOB_NAME | Job Name | MyBatchJob |

| $SLURM_SUBMIT_DIR | Submit Directory | /home/ab123456 |

| $SLURM_JOB_NODELIST | Nodes assigned to job | ncm[0001,0004,0074-0080] |

| $SLURM_SUBMIT_HOST | Submitting host | login.terrabyte.lrz.de |

| $SLURM_JOB_NUM_NODES | Number of nodes used by job | 4 |

| $SLURM_NNODES | Same as above | 4 |

| $SLURM_JOB_CPUS_PER_NODE | Number of cores per node | 8,8,8,6 |

| $SLURM_TASKS_PER_NODE | Number of tasks per node | 1,1,1,1 |

| $SLURM_MEM_PER_CPU | Memory per CPU in MB | 3000 |

| $SLURM_NTASKS | Total number of tasks for job | 48 |

| $SLURM_NPROCS | Total number of processes for job | 48 |

Besides these commonly used environment variables, there are many more available. Please consult the SLURM documentation for more information.

SLURM Exit Codes

When a signal was responsible for a job or step's termination, the signal number will be displayed after the exit code, delineated by a colon(:).

Slurm displays a job's exit code in the output of the scontrol show job and the sview utility. Slurm displays job step exit codes in the output of the scontrol show step and the sview utility.

-

For sbatch jobs, the exit code that is captured is the output of the batch script.

-

For salloc jobs, the exit code will be the return value of the exit call that terminates the salloc session.

-

For srun, the exit code will be the return value of the command that srun executes.

-

Exit codes triggered by the user application depend on specific compilers, e.g. List of Intel Fortran Run-Time Error Messages

Details about SLURM exit codes:

General information on SLURM exit codes (SLURM documentation)

List of SLURM exit codes and their meanings

A GUI for job management

The command sview is available to inspect and modify jobs via a graphical user interface:

- To identify your jobs among the many ones in the list, select either the "specific user's jobs" or the "job ID" item from the menu "Actions Y Search"

- By right-clicking on a job of yours and selecting "Edit job" in the context menu, you can obtain a window which allows to modify the job settings. Please be careful about committing your changes.